Regulatory Policy in the Age of Artificial Intelligence

FDA Proposes an Agile Regulatory Paradigm for Software Devices

Artificial intelligence (AI)-based devices are unique in their ability to learn from real-world use and experience, which can translate into rapid improvements in performance and new applications. In recognition of this capability, the FDA is proposing a new regulatory framework for reviewing and approving modifications to these devices after they have been cleared or approved.

Rapid advances in AI are creating new opportunities for software-only devices, known as Software as a Medical Device (SaMD), to be commercialized for medical purposes. Under the Federal Food, Drug, and Cosmetic Act (FD&C Act), FDA considers a medical purpose to be one intended to treat, diagnose, cure, mitigate, or prevent disease or other conditions. Unlike changes to hardware-based devices, modifications to these devices can be implemented readily in response to post-market performance and safety data. FDA wants a more agile regulatory paradigm for these modifications, one that keeps up with the pace of technology development while ensuring that the devices remain safe and effective for their intended use.

Our vision is that with appropriately tailored regulatory oversight, AI/ML-based SaMD will deliver safe and effective software functionality that improves the quality of care that patients receive.

In a recently released discussion paper seeking stakeholder feedback, the FDA is proposing a risk-based approach to SaMD modifications using a Total Product Lifecycle (TPLC) model.

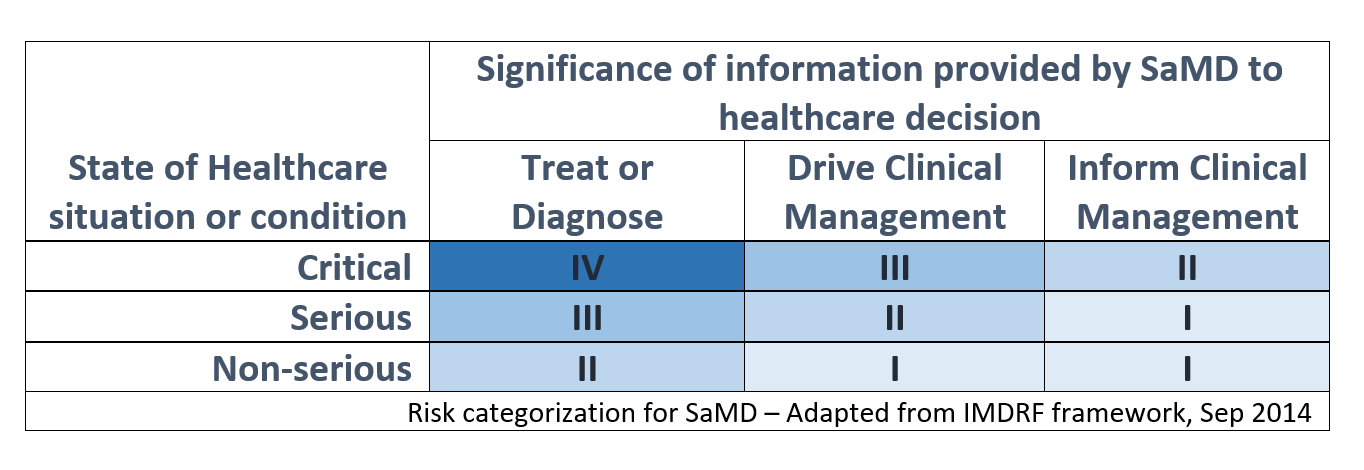

Risk categorization is based on the framework developed by the International Medical Device Regulators Forum (IMDRF), which defines four risk categories, from lowest (I) to highest (IV), based on the clinical situation and device use. The two dimensions are the intended use, expressed as the significance of the information the SaMD provides (treat or diagnose, drive clinical management, or inform clinical management), and the state of the healthcare situation or condition (critical, serious, or non-serious).

As an example, an AI-based SaMD that screens brain CT scans to alert a neurovascular specialist to a suspected large vessel occlusion, in parallel with the routine standard of care, would be considered a Category II device because it informs clinical management in a critical healthcare situation. If, after a proposed modification, the same device claimed to diagnose stroke, it would be treated as Category IV. The wording of the intended use therefore determines the risk category and should be a key consideration when developing a regulatory submission strategy.
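Because the IMDRF categorization is essentially a lookup over the two dimensions described above, it can be sketched in a few lines. The Python below encodes the IMDRF table as we understand it from the 2014 framework; the enum and function names are our own illustrative choices, not part of any FDA or IMDRF tool, and the sketch skips the real work of mapping an intended-use statement onto each dimension.

```python
from enum import Enum


class Significance(Enum):
    """Significance of the information the SaMD provides to the healthcare decision."""
    TREAT_OR_DIAGNOSE = "treat or diagnose"
    DRIVE = "drive clinical management"
    INFORM = "inform clinical management"


class Situation(Enum):
    """State of the healthcare situation or condition."""
    CRITICAL = "critical"
    SERIOUS = "serious"
    NON_SERIOUS = "non-serious"


# IMDRF SaMD risk categories (I = lowest, IV = highest), indexed by situation, then significance
_CATEGORY = {
    Situation.CRITICAL: {
        Significance.TREAT_OR_DIAGNOSE: "IV", Significance.DRIVE: "III", Significance.INFORM: "II"},
    Situation.SERIOUS: {
        Significance.TREAT_OR_DIAGNOSE: "III", Significance.DRIVE: "II", Significance.INFORM: "I"},
    Situation.NON_SERIOUS: {
        Significance.TREAT_OR_DIAGNOSE: "II", Significance.DRIVE: "I", Significance.INFORM: "I"},
}


def imdrf_category(significance: Significance, situation: Situation) -> str:
    """Return the IMDRF risk category for a given combination of the two dimensions."""
    return _CATEGORY[situation][significance]


if __name__ == "__main__":
    # Large vessel occlusion triage: informs clinical management in a critical situation
    print(imdrf_category(Significance.INFORM, Situation.CRITICAL))  # II
    # The same device, if modified to claim a stroke diagnosis
    print(imdrf_category(Significance.TREAT_OR_DIAGNOSE, Situation.CRITICAL))  # IV
```

By the same lookup, a device that drives clinical management in a critical situation, such as the intensive care unit example that follows, would fall into Category III.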

FDA anticipates three types of modifications to AI-based SaMD: changes in performance, changes in input data sources, and changes in intended use. For example, an AI-based SaMD intended to drive clinical management in an intensive care unit (a critical situation) based on vital signs data may be modified to reduce its false alarm rate by improving the underlying machine learning (ML) algorithm. This would be treated as a performance modification with no change in input sources or intended use, and the level of FDA scrutiny would be lower.

Alternatively, the same device could be modified to predict the onset of a physiological event requiring immediate attention, using a predictive model. This would be treated as a change in intended use that goes beyond driving clinical management through an alarm, and the level of FDA scrutiny would be higher.
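To make the three modification types concrete, the sketch below compares two hypothetical versions of a device description and reports which types a change touches. The `SaMDVersion` fields and the rule that any algorithm change counts as a performance modification are simplifying assumptions for illustration only; the discussion paper does not define such a check, and classifying a real change requires regulatory judgment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaMDVersion:
    """Hypothetical, simplified description of one released version of an AI-based SaMD."""
    intended_use: str
    input_sources: frozenset
    algorithm_version: str


def modification_types(before: SaMDVersion, after: SaMDVersion) -> set:
    """Return which of the three anticipated modification types a change involves.

    Assumption: any change to the algorithm is treated as a performance
    modification; a real assessment would consider what the change actually does.
    """
    types = set()
    if after.algorithm_version != before.algorithm_version:
        types.add("performance")
    if after.input_sources != before.input_sources:
        types.add("inputs")
    if after.intended_use != before.intended_use:
        types.add("intended use")
    return types


if __name__ == "__main__":
    vitals = frozenset({"heart_rate", "blood_pressure", "spo2"})
    v1 = SaMDVersion("drive clinical management: ICU alarm", vitals, "1.0")
    # Retrained to reduce false alarms: same inputs, same intended use
    v2 = SaMDVersion("drive clinical management: ICU alarm", vitals, "1.1")
    # New predictive model for event onset: the intended use changes as well
    v3 = SaMDVersion("predict onset of physiological event", vitals, "2.0")
    print(modification_types(v1, v2))  # {'performance'}
    print(sorted(modification_types(v1, v3)))  # ['intended use', 'performance']
```

Returning a set rather than a single label reflects that one update can touch more than one type at once, as the predictive-model example does.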

Three important concepts outlined in the discussion paper provide a good understanding of FDA's approach to this agile regulatory paradigm for AI-enabled SaMD.

FDA proposes an interesting concept: a predetermined change control plan established at the time of the initial pre-market review. The plan would comprise a SaMD Pre-Specification (SPS) and an Algorithm Change Protocol (ACP) for the proposed AI-based SaMD. The SPS is based on the AI model retraining and update strategy and is expected to outline the anticipated modifications to performance or inputs, or changes related to the intended use. Note the key word anticipated: these modifications would need to be described in advance, at the time of the initial pre-market review.

The ACP, on the other hand, is expected to outline the specific methods the manufacturer intends to use to achieve the anticipated modifications identified in the SPS and to appropriately control their risks. Data management, model retraining, performance evaluation, and update procedures would all need to be specified in advance, together with the SPS.
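One way to picture the performance-evaluation part of an ACP is as an acceptance gate fixed in advance: a retrained model is released only if it meets pre-specified criteria on a held-out validation set. The sketch below is a hypothetical illustration; the choice of sensitivity and specificity as metrics, the threshold values, and the names `ConfusionCounts` and `acp_gate` are our assumptions, not requirements from the discussion paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Results of a candidate (retrained) model on a held-out validation set."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


# Hypothetical minimum values, locked in the ACP before any retraining takes place
ACCEPTANCE_CRITERIA = {"sensitivity": 0.90, "specificity": 0.85}


def acp_gate(candidate: ConfusionCounts, criteria: dict = ACCEPTANCE_CRITERIA):
    """Return (release_allowed, failures), where failures maps metric name to observed value."""
    failures = {}
    for metric, minimum in criteria.items():
        value = getattr(candidate, metric)
        if value < minimum:
            failures[metric] = value
    return (not failures, failures)


if __name__ == "__main__":
    print(acp_gate(ConfusionCounts(tp=93, fp=20, tn=180, fn=7)))   # (True, {})
    print(acp_gate(ConfusionCounts(tp=80, fp=10, tn=190, fn=20)))  # (False, {'sensitivity': 0.8})
```

Because the criteria are fixed before any update is trained, the gate mirrors the idea that the ACP is pre-determined rather than tuned after the fact; reporting every failing metric, not just the first, also supports the documentation an ACP would call for.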

A second important concept outlined in the paper is Good Machine Learning Practices (GMLP). These practices are meant to ensure that data with a valid clinical association is used to build the SaMD's AI model and that the model demonstrates both analytical and clinical validation. Quality controls around data integrity, selection of training and validation data sets, clinical validation protocols, and robust acceptance criteria therefore become critical. In FDA's view, establishing GMLP requires a strong culture of quality that goes beyond a standard quality management system, along with a focus on organizational excellence. Organizations that meet these criteria will have a significant advantage through a streamlined regulatory approval process, as outlined in FDA's working model of the Software Precertification Program.

The third key concept, a component of the TPLC approach, is building trust through transparency when implementing modifications to AI-enabled SaMD, supported by post-market monitoring of real-world performance. FDA's interest is to ensure that these devices remain safe and effective while keeping regulatory requirements on manufacturers least burdensome. In return, FDA expects manufacturers to establish trust by committing to transparency in post-market activities. Manufacturers would be required to provide periodic reports on SaMD updates implemented under the approved SPS and ACP, along with key performance metrics. Changes beyond the approved SPS and ACP may, in some cases, simply be documented; in others, they may require FDA review. Changes to labeling, and to the compatibility of any affected supporting devices, accessories, or non-device components, will need to be communicated to all stakeholders through appropriate mechanisms.

The framework proposed in this discussion paper is not (yet) formal guidance or a requirement. FDA is seeking feedback from stakeholders before formalizing it, and additional statutory authority may be needed to fully implement the new approach.

We plan to apply our current authorities in new ways to keep up with the rapid pace of innovation and ensure the safety of these devices.

FDA Commissioner

The implications of these concepts and of the proposed regulatory framework for modifications to AI-enabled SaMD are significant for manufacturers. FDA has demonstrated a considerable amount of forward thinking and a collaborative approach, consistent with what we recently wrote in our blog post How Artificial Intelligence is Shaping FDA's Thinking. With proper planning, SaMD manufacturers can gain a significant advantage through streamlined approval of their devices and subsequent modifications.

References:

FDA Discussion Paper on AI-based SaMD, April 2019

Statement from FDA Commissioner on a tailored review framework for AI-based Medical Devices, April 2019

IMDRF Risk Categorization Framework for SaMD, September 2014

FDA's Software Precertification Program, v1.0, 2019